Table of Contents

From the information age to the disinformation age

How we created the social dilemma

A change in the business model

The 3 main goals of social media companies

The Stanford Persuasive Technology Lab

Real-world applications, real-world consequences

The desire (or need) for constant social approval

The problem with the “it’s just the latest thing” narrative

Frankenstein algorithms that change themselves

Billions of different realities

Persuasion in the hands of bad actors

Actionable tips from those in the social dilemma:

Hiram's 3 Additional Tips for Navigating Our Social Dilemma

Overview

Netflix's latest docu-drama The Social Dilemma seeks to explore the ramifications of social media on our everyday lives. The documentary is critical in putting these real concerns in front of millions of Netflix subscribers, who, ironically, are a prime example of the documentary's subject matter: The fight for our attention through unethical product and technology design.

As a privacy advocate myself, I didn't know what to expect in terms of how social media and its users would be portrayed. I've previously written about the #DeleteFacebook dilemma, which seems to have been relatively short-lived, as these movements often are. Something I've come to learn the hard way is that people are quick to outrage, and quick to forget. Nevertheless, it's important that these conversations stay alive in order to bring about meaningful change.

This piece seeks to understand what exactly the social dilemma is, how we created this social dilemma, and what we can do to go about repairing it.

From the information age to the disinformation age

The documentary opens with a series of introductions by early employees at Big Tech companies. These are the pioneers of YouTube's algorithm, Facebook's 'like' button, monetization strategies, and everything else that makes the products we're familiar with what they are today.

Nothing vast enters the life of mortals without a curse. – Sophocles

Some begin by explaining that they initially believed the product they were building to be a genuine force for good. After the introductions, however, the documentary crew asks one simple question: "What is the problem?" Everyone is stumped, as no one has a direct answer to the question.

We weren't expecting any of this when we created Twitter over 12 years ago. – Jack Dorsey, Twitter Founder & CEO

There's a problem in the tech industry, but it doesn't have a name. Tristan Harris, co-founder of the Center for Humane Technology and the main protagonist in the documentary, urges us to think about what is normal and what isn't. We're living through a time where we're constantly bombarded with breaking news, election interference, and a nonstop news cycle full of things that aren't typically thought of as "normal." Why is this?

It's not an accident. We got here by design. Our devices, our apps, and the services we use every single day are intentionally engineered in a way that demands our attention 24/7. And we give it to them, willingly and unwillingly.

Billions of people use these services every day. Yet, it's only a handful of designers and engineers that end up becoming the puppet masters for these 2+ billion people because of the decisions that they make internally.

What is the social dilemma?

Like the tech insiders being interviewed in the documentary, it's tough for me as well to answer this question succinctly. I don't think it can be attributed to any one particular issue, but rather a web of issues that create a complicated strand that's difficult to turn away from. The best term to describe this issue would be surveillance capitalism.

Surveillance capitalism: Capitalism profiting off of the infinite tracking of everywhere everyone goes by technology companies whose business model is to make sure that advertisers are as successful as possible.

This marketplace didn't exist up until about a decade ago. This marketplace trades exclusively in human futures. At scale. These markets have produced trillions of dollars that have made today's internet companies the richest companies in the history of the world.

It's somewhat difficult to imagine because it's relatively new, despite it being incredibly pervasive. It's especially difficult to imagine and communicate it to people who are unaware about this subject because of its deeply embedded roots in our everyday lives. Surveillance capitalism has become the norm, and to discuss it oftentimes means uprooting the companies, technologies, and practices that we're so accustomed to on a daily basis.

How we created the social dilemma

A change in the business model

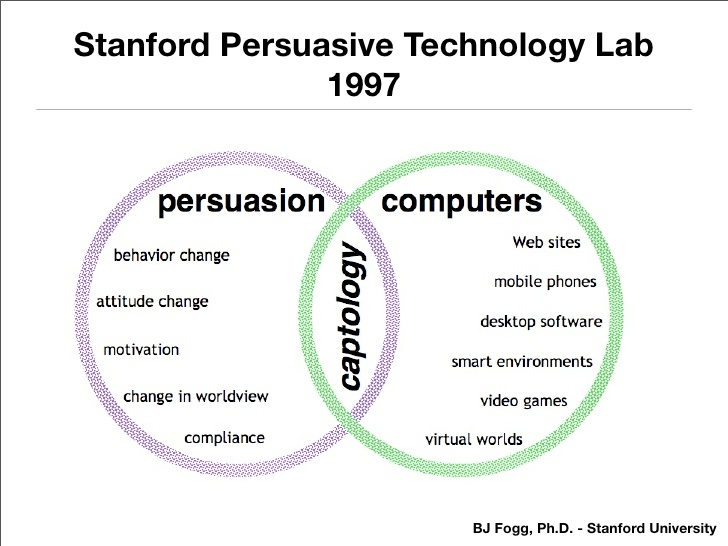

There has been a shift in the technology industry: tech companies, and social networks in particular, are now founded on the business model of selling access to user data and changing behaviors. In decades past, the tech industry built hardware and software and sold them to customers. It was a straightforward, simple business. Now, the money-making machine lies in our data and information.

Be honest: Have you ever stopped to think about how Facebook, Instagram, Snapchat, YouTube, Twitter, etc. keep the lights on? You are paying for it. It might not be evident because there's no recurring fee that you see on your credit card every month, but you pay for it every time you use the service. And in many instances, you're paying for these services even if you don't have an account or actively use the service. This is because platforms like Google and Facebook track you all over the web and create a profile for you, without you ever knowing.

(This is done through scripts and partnerships between data collectors, but the technicalities of how this information is collected are beyond the scope of this piece.)

Why do they do this? Usually to advertise to you.

You might be thinking, "Big deal. Who cares if someone wants to serve targeted ads to me?" Big Tech absolutely loves hearing that, because you're simply helping their cause. People have actually said it to me both online and offline when I attempt to engage in a conversation about the importance of digital privacy and ethics. But the reality is that the reason is much more complicated, and much more sinister. The ultimate reason is actually to manipulate. This is where things get infinitely dangerous.

The classic saying is, "If you're not paying for the product, then you are the product." Unfortunately, even this saying has become far too simplistic.

It's the gradual, slight, imperceptible change in your own behavior and perception that is the product. - Jaron Lanier, Founding Father of Virtual Reality / Computer Scientist

Jaron Lanier emphasizes throughout the documentary that this change in your own behavior is the true product, and the only possible product. Big Tech can only make money from your behavior. From changing what you do, based on what they want you to do. It's slight, but over time, and across 3B+ people, the delta in change is bigger than anyone could imagine.

From an advertiser's perspective, this product is not just a gold mine, it's the gold mine. All of the people you could ever hope to reach live on these platforms, regardless of whether they have an account with the service or not. It certainly helps if they do, because then you can rack up infinitely more data (and with ultimate precision), but think about how insane it is that our most private information is being sold to the highest bidder in order to change our behaviors.

As you might imagine, this requires tons of data. So much data, in fact, that literally everything you do is tracked:

- What you look at

- What you don't look at

- How long you look at it

- Where you mentally are

- Where you physically are

- Where you zoom in on a picture

- Who you interact with the most/the least

- Why you interact with certain people, and when

Any sort of inquiry, demographic, or psychographic that an advertiser has can be answered because the data for it already exists. Your likes, dislikes, fears, interests, personality types, you name it. It's already being harvested.

This leads us into modeling.

Models and algorithms

It's not in Facebook's business interest to actually give up the data that they mined and harvested, but rather to create models that get increasingly better at predicting our actions and behaviors, with the ultimate objective to change those actions and behaviors.

All this data gets fed into a unique profile, or model, that is reflective of who you are. Once you have this model, every single datapoint that can be tracked gets fed into this model to make better predictions about you, your sentiments, and your behaviors. Over time, this model keeps improving, to the point where the algorithms at these companies know you better than any other person in your life. Even those closest to you.

The 3 main goals of social media companies

At social media companies, there are three distinct goals:

- Engagement: Drive up your usage. Keep you scrolling, liking, commenting, and remaining active on the platform.

- Growth: Encouraging you to keep coming back and inviting your friends, and getting them to invite their friends, and so on.

- Advertising: Make sure that as growth and engagement are happening, advertising revenue is maximized.

Each of these goals is powered by algorithms whose job is to figure out what to show you in order to keep those numbers going up.

Tim Kendall, former executive at Facebook, says that they talked about Mark Zuckerberg having ultimate control over these goals, or dials. Need to keep more users engaged during the election this time around? Turn the dial up on that one. Need more advertising revenue this week? Crank up that dial. If this doesn't frighten every single person on the face of this earth, I don't know what will. Not only because it's the sort of controls that we'd see in a sci-fi movie, but because Facebook has faced a myriad of ethical and digital privacy issues since its inception that demonstrate they are not equipped to puppeteer such specific control over 3 billion people.
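One way to picture these "dials" is as weights in a scoring function that decides what lands at the top of your feed. The sketch below is purely illustrative and is not how any real platform is implemented: the `Post` and `Dials` classes, the predicted probabilities, and the example posts are all made-up assumptions. It only shows how turning one weight up can reorder the same set of posts.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    p_engage: float    # hypothetical predicted chance you like, comment, or keep scrolling
    p_invite: float    # hypothetical predicted chance you share it or pull in a friend
    ad_revenue: float  # hypothetical expected ad revenue if this post is shown


@dataclass
class Dials:
    # One weight per goal: engagement, growth, advertising.
    engagement: float = 1.0
    growth: float = 1.0
    advertising: float = 1.0


def score(post: Post, dials: Dials) -> float:
    """Weighted sum of the three goals; a higher score ranks earlier in the feed."""
    return (dials.engagement * post.p_engage
            + dials.growth * post.p_invite
            + dials.advertising * post.ad_revenue)


def rank_feed(posts, dials):
    """Return the posts ordered from highest to lowest score."""
    return sorted(posts, key=lambda p: score(p, dials), reverse=True)


posts = [
    Post("friend's vacation photo", p_engage=0.60, p_invite=0.10, ad_revenue=0.00),
    Post("sponsored sneaker ad", p_engage=0.20, p_invite=0.05, ad_revenue=0.50),
    Post("viral outrage thread", p_engage=0.50, p_invite=0.40, ad_revenue=0.05),
]

default_feed = rank_feed(posts, Dials())
ad_heavy_feed = rank_feed(posts, Dials(advertising=3.0))

print([p.title for p in default_feed])
print([p.title for p in ad_heavy_feed])
```

Nothing about the posts changes between the two calls. Only the advertising weight does, and that alone is enough to move the sponsored post to the top.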

Shows and movies often portray the bad guys as being violent and malicious, but what happens when these bad actors are in the shadows? What happens when we're being manipulated, but we don't even know we're being manipulated? Where do we go from there? We love to idealize and think about the future, but that's essentially where we're at right now. And we, as a collective species, don't even realize it.

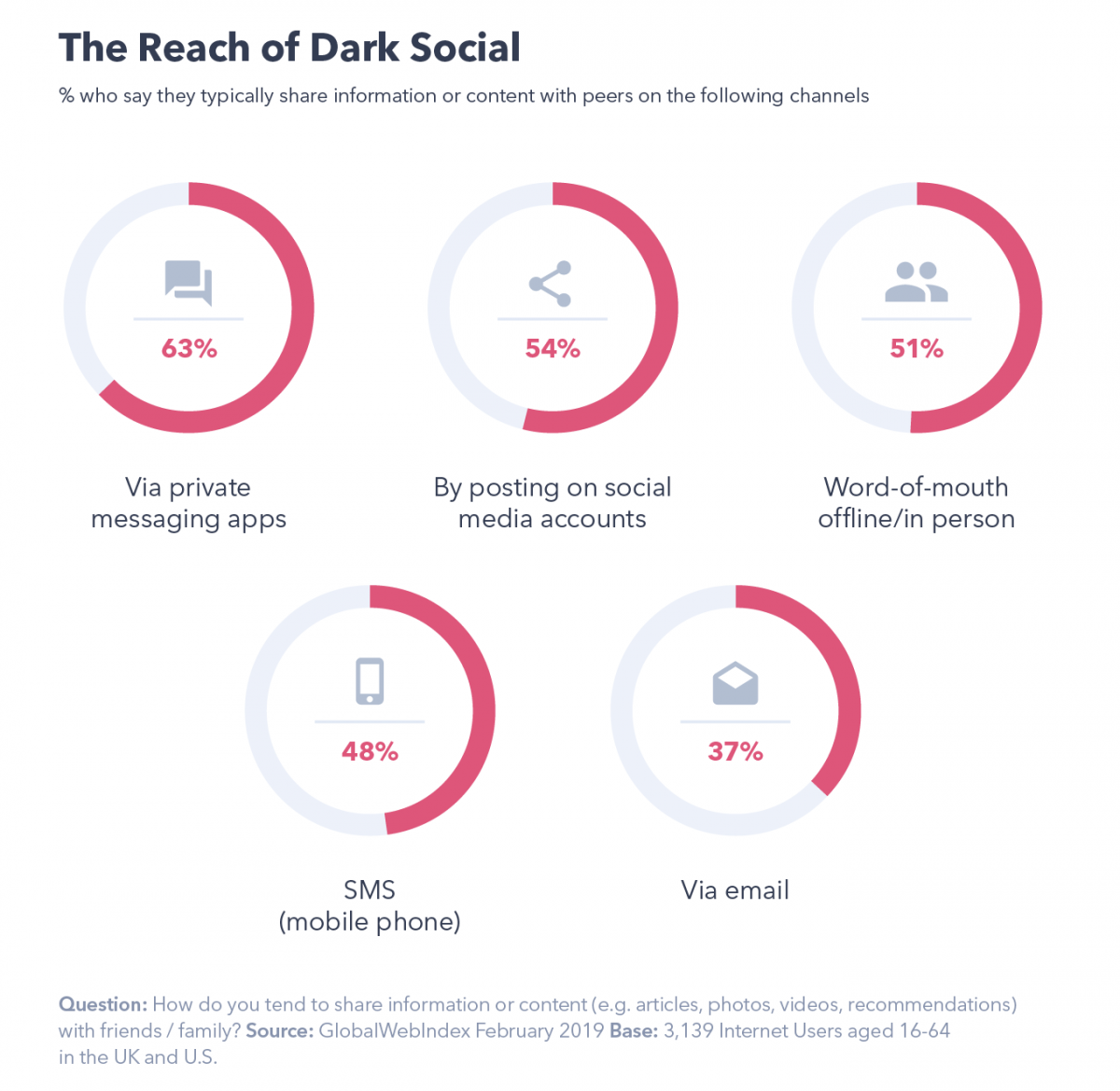

The primary form of connection and communication in today's world is digital. So even though billions of people are linked together, the only reason they're connected is because of a third party advertiser.

We've created a world in which online connection has become primary. Especially for younger generations. And yet, in that world, any time two people connect, the only way it's financed is through a sneaky third person who's paying to manipulate those two people. So we've created an entire global generation of people who are raised within a context where the very meaning of communication, the very meaning of culture, is manipulation. We've put deceit and sneakiness at the absolute center of everything we do. - Jaron Lanier, Founding Father of Virtual Reality / Computer Scientist

For as long as we put deceit and sneakiness at the center of everything we do, we won't be able to maneuver our way out of this. If this is how we operate on a daily basis, there is no motivation and no fundamental shift in the ground that incentivizes us to do it a different way. A better way.

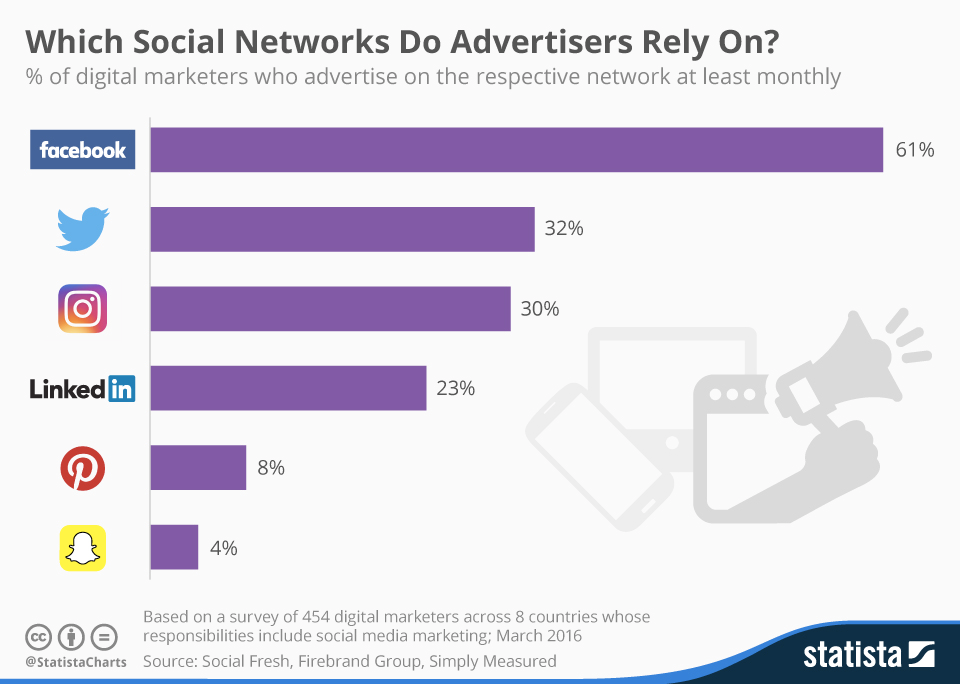

The Stanford Persuasive Technology Lab

The overarching question in Stanford's Persuasive Technology Lab is, "How could you use everything we know about the psychology of what persuades people, and build that into technology?" Many prominent figures in Silicon Valley went through this class, and it's a large part of the reason why there are positive intermittent reinforcements built into the technology that we use today.

Swipe down to refresh? New post. Post something new? Wait for the instant like. Comment something clever? Here are some reactions. Like something? Display a huge red heart over the post. Got tagged in a photo? Here's a notification to let you know which picture you got tagged in.

Positive intermittent reinforcement operates just like slot machines in Las Vegas: You don't know if you're going to get something, and you don't know when you're going to get something. This is where we see tools planting an unconscious habit of picking up your phone and checking the latest trends on Twitter, turning on notifications on your favorite influencers on Instagram, and literally living inside of your head rent-free. Well, in reality, we are the ones paying those platforms to live inside of our heads.
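To make the slot machine comparison concrete, here is a minimal simulation of an unpredictable reward schedule. It is a sketch under stated assumptions, not a model of any real app: the function names, the 30% reward probability, and the fixed random seed are all hypothetical choices made for illustration. Each "refresh" pays off with some probability, so the number of refreshes between rewards keeps changing.

```python
import random


def pull_to_refresh(rng: random.Random, reward_probability: float) -> bool:
    """One refresh: True if something 'new' shows up this time."""
    return rng.random() < reward_probability


def gaps_between_rewards(refreshes: int, reward_probability: float, seed: int = 0):
    """Count how many refreshes it took to reach each reward."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    gaps, since_last = [], 0
    for _ in range(refreshes):
        since_last += 1
        if pull_to_refresh(rng, reward_probability):
            gaps.append(since_last)
            since_last = 0
    return gaps


gaps = gaps_between_rewards(refreshes=1000, reward_probability=0.3)
print("first ten gaps:", gaps[:10])
print("average gap:", sum(gaps) / len(gaps))
```

The average gap settles near a predictable value, but any individual gap does not. That unpredictability from one refresh to the next is the part that resembles a slot machine.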

The sneaky notifications

Have you ever felt a vibration in your pocket where you usually keep your phone, only to check it and realize that there was no notification? That's what this is.

Furthermore, consider the fact that notifications almost never contain enough information about the notification itself. For example: Say you got tagged in a photo. Not only did you receive a push notification on your phone, but you also got sent an email notifying you about the tag. First, the email notification happens just so that you can be enticed back to your phone, where you most likely use the social media platform the most.

Notice how the notification doesn't show you the photo that you were tagged in. This too is to get you back on the platform, where the first post you'll see is something that's calculated to be the most engaging thing the platform's algorithm could present to you.

The platform could very easily show you the picture that you were tagged in, and that's it. Notification over. By not showing you what picture you were tagged in, the social media network is literally demanding that you open a new tab and log into the platform, where you'll inevitably get lost in everything else.

This is not a coincidence, this is by design. It's manipulation.

We're lab rats, but not even lab rats that are to be used for massive human good. We're not lab rats developing solutions to cure diseases. We're lab rats so that these platforms can figure out how to feed us more ads and change our behavior more effectively, because this results in more ad revenue.

Short story: I had a friend whose first post on her Instagram feed was always her crush, despite having really low (public) engagement rates with his content. This is because she was always looking at his posts and looking at his profile, despite never actively liking or commenting on his photos. Nevertheless, Instagram/Facebook knows that she is interested in him, and will keep feeding her his content as much as possible because it keeps her engaged and coming back to the platform.

Real-world applications, real-world consequences

Facebook discovered that they can affect real-world behavior and emotions without ever triggering the user's awareness. This is all about exploiting vulnerabilities in human psychology, without users knowing about it.

The inventors, creators... understood this... consciously. And we did it anyway. – Sean Parker, Former President at Facebook

Employees know it, executives know it, but most users don't. This gap that exists is, in large part, a reason why we're here today. People simply aren't aware about this. Billions of people don't realize what's happening behind funny animal videos and memes. Without this awareness, we can't even take the first step.

Social media isn't solely a tool waiting to be used. It has its own goals, and it has its own means of pursuing them by using your psychology against you. - Tristan Harris, Former Design Ethicist at Google and Co-Founder at Center for Humane Technology

There's a video of Steve Jobs talking about the computer. Steve Jobs describes computers as "bicycles for our minds."

Computers do in fact allow us to do incredible things, and at incredible speed. But as with all technology, there are unexpected downsides that conflict with the upsides. Melvin Kranzberg's first law of technology states, "Technology is neither good nor bad; nor is it neutral."

Social media, however, is not a tool. A tool waits to be used. And if it isn't used, it doesn't do anything. Social media operates on its own. It doesn't wait to be used. It actively nudges you, encourages you, persuades you, and keeps you coming back using a plethora of psychological tricks and hacks.

There are only two industries that call their customers 'users': illegal drugs and software. – Edward Tufte, Statistician and Professor Emeritus of Political Science, Statistics, and Computer Science at Yale University.

Even as the inventors of the infinite scroll, of addicting growth tactics, of YouTube's recommendation algorithm were working behind the scenes to create these addiction techniques and were fully conscious of it, they still weren't able to control themselves when it came to using their phone and these social media platforms.

We've gotten so far as to invent boxes with timers in them that don't allow us to access whatever's in them, simply because our own willpower is no longer working when it comes to containing ourselves and our social media addictions. Social media is literally a drug that releases dopamine the same way any other drug would.

The desire (or need) for constant social approval

We are constantly curating our lives on these social platforms because we want others to see how well we're doing. How amazing our lives are. It's the reason we get so-called influencers faking their travels and making up stories about how much fun they had hiking, biking, kayaking, whatever to the world (even when someone else is funding the lavish lifestyle).

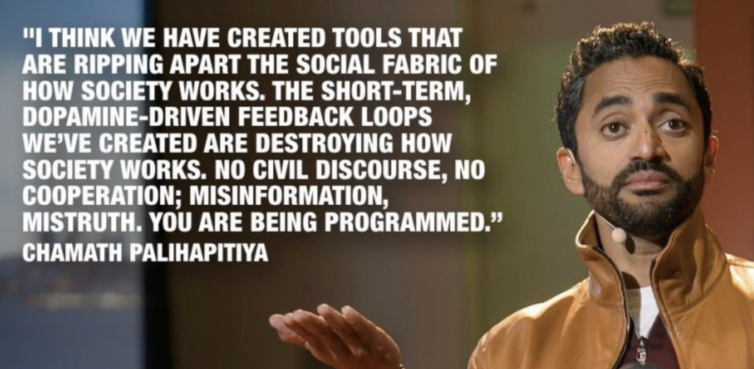

We conflate [vanity metrics] with value, we conflate that with truth... And instead what it really is is fake, brittle popularity that's short-term and that leaves you even more, and admit it, vacant and empty before you did it. Because then it forces you into this vicious cycle where you're like, "What's the next thing I need to do now? 'Cause I need it back." Think about that compounded by 2 billion people, and then think about how people react then to the perceptions of others. It's really, really bad. – Chamath Palihapitiya, former VP of Growth at Facebook

We feel the need to be constantly validated with hearts, likes, comments, followers, and all these other vanity metrics because this is how these platforms are designed. Without that, there's no attention. And without attention, there's no ad revenue. There's no behavioral change that can be sold to the highest bidder.

The former VP of Growth at Facebook talks about the dangers of Facebook and social media as a whole.

It's plain as day to me. These services are killing people... and causing people to kill themselves. - Tim Kendall, former President at Pinterest

Though these claims seem drastic, statistics show an increase in anxiety, depression, and self-harm among teens and pre-teens. Numbers had remained constant up until about the last decade or so, when these platforms started gaining massive popularity. I, personally, have come across people online disputing these statistics with no real evidence of their own, and I get it. Sometimes it's tough to admit that the platform you love (and, sometimes more importantly, the one making you a living) is causing real, prolonged harm.

It's difficult to admit, but the truth is the truth.

I recently saw a tweet of someone praising YouTube like no other because it's "free." It's not free, but the guy is a YouTuber, and he makes a living from it. So it's natural that he would see it as such, and I completely understand it. However, it's dangerous (and incorrect) to propel the idea that these platforms are free.

As an indie maker myself, I love the fact that you can create content in today's world and get paid for it. I love that. I encourage that. What I'm not crazy about is how the majority of that revenue is being financed. Therefore, we have to be responsible about how we communicate these platforms to others and how we approach those conversations as they relate to these platforms.

The problem with the “it’s just the latest thing” narrative

The argument that we'll just "adapt" to it the same way that we adapted to newspapers, or the radio, or the television, simply cannot be used. This is a whole new beast. And perhaps the reason that this narrative is still alive is that it was the narrative perpetuated when the radio was supposed to kill the newspaper, and when the TV was supposed to kill the radio. But it's simply not the case here. Literally nothing comes close to the rate at which technology has increased. Computer processing power has gone up about a trillion times, while our brains, our physiology, have not evolved at all.

And communicating that to people, let alone people at scale, is incredibly difficult. Not just because it takes a certain level of technical knowledge to actually understand how these technologies work, but because platforms and outlets are so constantly demanding and fighting for our attention that we don't prioritize this as something we can change.

Some people dismiss it. Others see the importance of this, but don't feel strongly enough about it to actually change their behavior in a way that helps them (and in passing, everyone else). I know this through personal experience. To the average person, I'm a person who might be wearing a tin-foil hat.

I'm not that extreme, but I do feel strongly that when privacy, which is an inalienable human right, is not being protected, we have a moral responsibility to take action. When we think about sweatshops and modern-day slavery, those are infringements on human rights. When it comes to genocide, that is an infringement on fundamental human rights.

The fact that the internet is run by the largest and most powerful advertising agencies the world has ever seen in Google, Facebook, Twitter, etc., is an infringement on our fundamental human rights. We have no online privacy.

Looking for something online? They know what you searched and why you searched it. Shopping online? They know. Searching for an embarrassing medical symptom? They know. You literally go online, and they're tracking you. All around the web. Whether you're logged in or not, whether you have an account or not, it's all being harvested.

There are literally enormous rooms and warehouses with computers interconnected with each other, running extremely complicated programs, programs that even humans don't understand at this point (because they have evolved so much since inception).

Frankenstein algorithms that change themselves

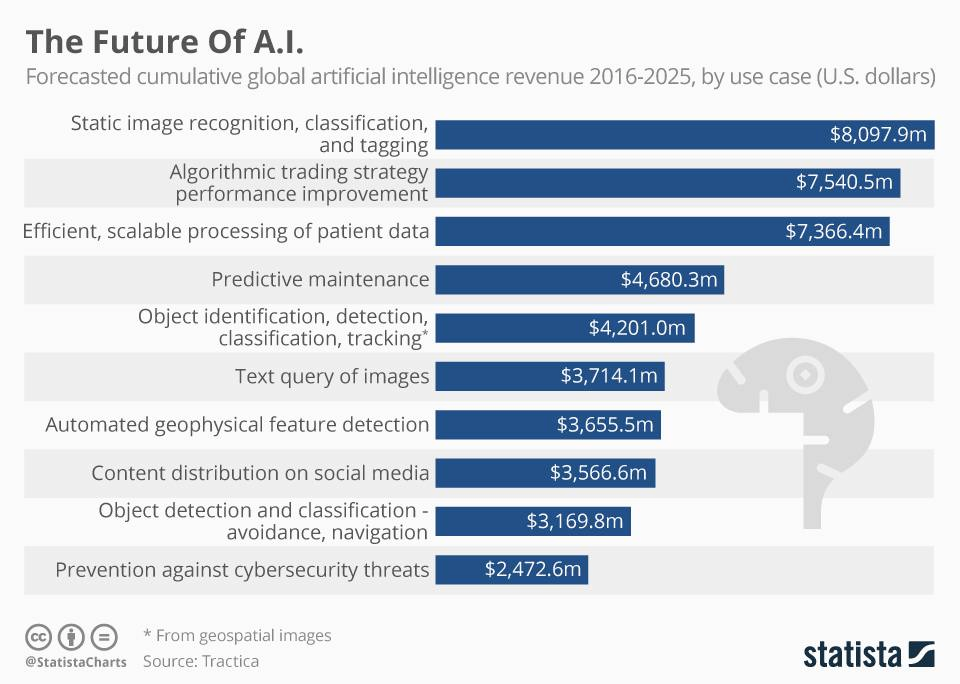

The terms Machine Learning and Artificial Intelligence are big buzzwords, but the fact of the matter is that these algorithms are so complex that they've evolved to be "intelligent." The whole point of ML/AI is to provide a better output. You give the algorithm the desired output, and the algorithm itself constantly learns how to produce it. As it gets better at curating our news feeds and explore sections, we spend longer and longer on these platforms, exactly as designed.

The algorithm has a mind of its own, so even though a person writes it, it's written in a way that you kind of build the machine, and then the machine changes itself. – Bailey Richardson, early Instagram

The scariest part? No one really understands how these algorithms are working to do what they do.

In fact, Facebook had to shut down some of their AI chatbots after they started speaking to each other in a language the robots themselves had devised and no human could understand.

I like to say that algorithms are opinions embedded in code... and that algorithms are not objective. Algorithms are optimized to some definition of success. – Cathy O'Neil, PhD Data Scientist

We are playing a game against artificial intelligence that we're not going to win. This AI can anticipate your next move in ways you can never imagine (but you've probably experienced). Have you ever thought of something, and then later saw an ad for it? That's not coincidental. That's by design. Some of these algorithms have gotten so good at modeling you, they can even predict what your next move (or purchase) is going to/should be.

Billions of different realities

When we search for the definition of a word in a dictionary, we all see the same definition. If you were to look up the word amazing, it would be the same definition that I see, which is, "causing great surprise or sudden wonder." Depending on which dictionary you use, you might get a slightly different variation of the definition. But generally, we can all agree that the word amazing means something similar to this definition--something that causes great surprise and/or sudden wonder. No matter how many ways you spin it, the definition of the word itself does not change.

Newsfeeds, on the other hand, do not have these set definitions. Each and every newsfeed is entirely different. There is no such thing as the same news feed. Ever. Think about that for a moment. In the years that social media has been alive, with its 3+ billion active users, no two people are seeing the same newsfeed at the same time. Ever.

Despite being in similar physical proximity to each other, and having similar interests, sharing friend groups, coworkers, etc., people are being fed entirely different news feeds.

It worries me that an algorithm that I worked on is actually increasing polarization in society. But from the point of view of watch time, this polarization is extremely efficient at keeping people online. - Guillaume Chaslot, former YouTube engineer

If I looked up the definition of amazing, and then you looked it up and it gave you a completely different definition, or one that did not align with the general definition of what the word actually means, you'd tell me I was wrong. And I'd tell you that you were wrong. And we'd probably tell each other to "look it up." But that's the thing: we can't even reliably instruct someone to "look it up" anymore, because we don't know what that other person is going to end up seeing when they do look it up. Chances are they might just end up being even more misinformed than they were before.

We must fix this. Our survival literally depends on it.

It's 2.7 billion Truman shows. Each person has their own reality, with their own facts. Over time, you have the false sense that everyone agrees with you, because everyone in your newsfeed sounds just like you. Once you're in that state, you're easily manipulated. – Roger McNamee, early investor in Facebook

Roger McNamee compares this newsfeed manipulation to being manipulated by a magician, where it's a set-up. The magician tells you to pick a card, any card. The issue is that it doesn't really matter which card you pick, because the cards are pre-arranged so that the magician's trick still works on you: you're just going to pick the card that the magician wants you to pick. That's how Facebook works.

Facebook, Instagram, Twitter, YouTube give you the illusion of choice. You pick your friends, pick your likes, pick your interests. But at the end of the day, who is curating your news feed? They are.

This is why personal and political polarization occurs. Each side is thinking: "How could they be so stupid? How do they not see the same information?" Well, they're not seeing the same information. That's the whole point.

It's easy to think that it's just a few stupid people who get convinced, but the algorithm is getting smarter and smarter every day. So today, they're convincing people that the earth is flat, but tomorrow they will be convincing you of something that's false. – Guillaume Chaslot, Former YouTube Engineer

One prominent example of these conspiracy theories is Pizzagate, which led to the arrest of a man after he showed up with a gun to a pizza place. The man said he was there to liberate trafficked people from the basement--the pizza place did not even have a basement.

It turns out that the social network's recommendation engine started recommending Pizzagate conspiracy content on its own when it detected that users might be into other conspiracy theories as well. This happened despite those people never having searched for the term "Pizzagate" in their lives.

Considering the fact that false information spreads six times faster than real news, what is to come of this? When we've created systems that bias towards false information because false information makes these platforms more money, what are we to do?

It's a disinformation-for-profit business model. – Tristan Harris

People now have no idea what's true, and it's literally become a matter of life and death. Specifically with COVID-19. This coronavirus has only exposed how severe this social dilemma is, but what about everything else that these disinformation campaigns are changing? What about the stuff that we don't see, that still has very real effects on the world around us? How do we find that information? And when it is found, who is to sound the alarm on a global scale? And how are we to bring about actual change?

Persuasion in the hands of bad actors

Facebook is a persuasion tool, plain and simple. Sure, it might have those animal videos we love so much, but Facebook is for persuasion, first and foremost. For an authoritarian government, Facebook is the most effective tool there is.

Some of the most troubling implications of governments and other bad actors weaponizing social media is that it has led to real offline harm. – Cynthia M. Wong, Former Senior Internet Researcher at Human Rights Watch

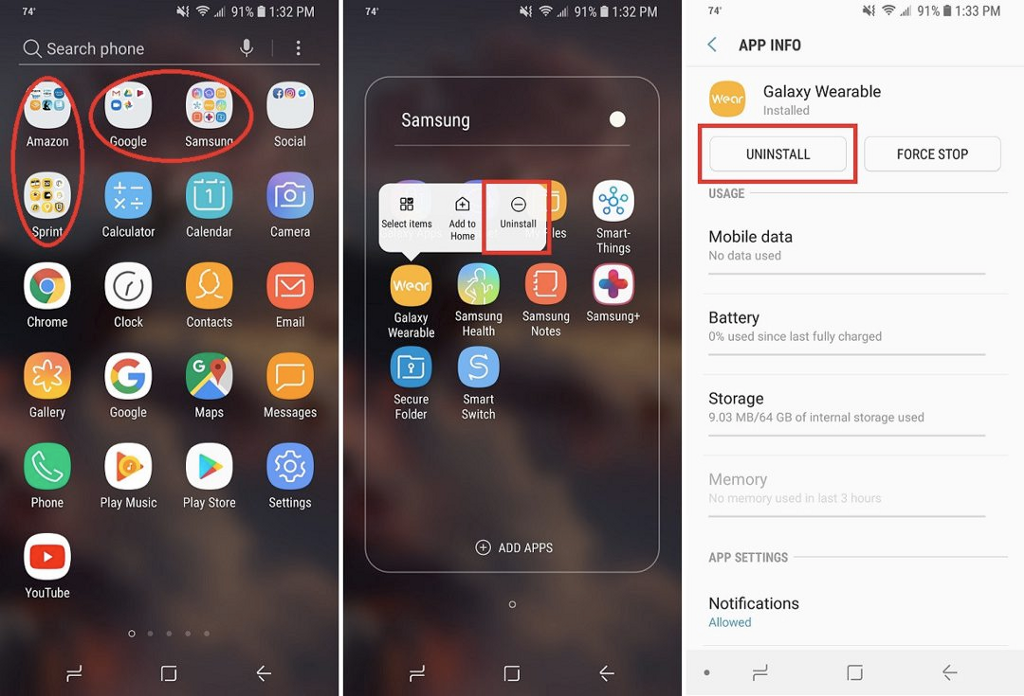

Cynthia cites Myanmar, where people equate "Internet" with "Facebook." One of the reasons this happens is that the mobile Facebook app comes pre-installed on people's phones when they're bought. This is commonly referred to as bloatware. As if that wasn't enough, an account is automatically created for the purchaser.

You may have noticed that if you have a Samsung phone or an LG phone, those phones usually come with a ton of manufacturer-specific apps. This is bloatware. And most of the time, these apps are unremovable. Many phones also prevent Facebook from being deleted from the phone.

In Myanmar specifically, Facebook has created an extremely effective way to incite violence against Rohingya Muslims in the form of mass killings, mass burnings, mass rape, and other heinous crimes against humanity.

It's not that highly motivated propagandists haven't existed before, it's that the platforms make it possible to spread manipulated narratives with phenomenal ease, and without very much money. - Renée Diresta, Research Manager at Stanford Internet Observatory

One specific tactic Facebook uses to reach people at scale with such precision is something called "lookalike audiences." Lookalike audiences are people who have exhibited some form of behavior similar to someone else you have previously targeted. For example: If you wanted to reach conspiracy theorists in order to manipulate an election and create polarization, you might choose Facebook's lookalike audiences for people who are interested in and susceptible to other conspiracy theories, because they're vulnerable.
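The general idea of "find more people who behave like these people" can be sketched with a simple similarity search. This is an assumption-laden toy, not Facebook's actual method, which isn't described here: the interest scores, user names, and the choice of cosine similarity against the average of a seed audience are all hypothetical and chosen only to illustrate the concept.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length interest vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def centroid(vectors):
    """Average the seed audience into one 'typical' interest vector."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def lookalikes(seed, candidates, top_k):
    """Return the names of the top_k candidates most similar to the seed audience."""
    center = centroid(list(seed.values()))
    ranked = sorted(candidates,
                    key=lambda name: cosine(candidates[name], center),
                    reverse=True)
    return ranked[:top_k]


# Made-up interest scores: [conspiracy videos, cooking, sports]
seed_audience = {"user_a": [0.9, 0.1, 0.2], "user_b": [0.8, 0.0, 0.3]}
everyone_else = {
    "user_c": [0.7, 0.2, 0.1],
    "user_d": [0.1, 0.9, 0.2],
    "user_e": [0.0, 0.1, 0.9],
}

matches = lookalikes(seed_audience, everyone_else, top_k=1)
print(matches)
```

In this toy data, the person whose interests most resemble the seed audience is picked out without ever having been targeted directly, which is what makes the technique so effective at scale.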

An important thing to note here is that there is virtually no regulation as to who can do this. You don't have to have any sort of licensing or special permissions in order to do this. You, as an advertiser on Facebook, have the power to use Facebook in its persuasive form–just as intended.

Democracy is for sale

In a world where no one believes anyone, those with power and influence can manipulate another country without actually invading any physical borders. The warfare is now happening online. It's happening digitally, where we all are. And in an increasingly connected world, there are no safe havens.

We in the tech industry have created the tools to destabilize and erode the fabric of society in every country, all at once, everywhere. – Tristan Harris

Think about it: our entire lives are virtually online. Where do we chat? Where do we connect? How do we arrange to meet, even offline? And once we're together in person, what's happening? Someone is posting online about what's happening. There's an Instagram story, there's a Snapchat sent, a tweet about something else... we're publishing our lives online willingly, without thinking about the consequences of those posts at scale.

Likes, comments, follows, and DMs create dopamine hits in our brain that leave us demanding more.

It's not about the technology being the existential threat. It's the technology's ability to bring out the worst in society... and the worst in society being the existential threat. — Tristan Harris

Everyone is entitled to their own opinions, but not everyone is entitled to their own facts. Facts are facts for a reason. We need to have some shared understanding of reality, because a world without a shared understanding of reality is chaos. And in several instances, we've seen real-world examples of this. This "chaos" is no longer hypothetical. It's active every day, and it keeps brewing as time passes and nothing holds these platforms in check.

What can we do about it?

In one of Mark Zuckerberg's testimonies before Congress, he suggested that the long-term solution is to build more AI tools that find patterns of use that no real person would produce. Whether you know a little or a lot about technology, I hope it's clear that building more of the tools that created this problem is not the answer. Hearing this statement should be a huge red flag for anyone, regardless of where you sit or what you believe.

We are allowing the technologists to frame this as a problem that they're equipped to solve. That's a lie. People talk about AI as if it will know truth. AI's not gonna solve these problems. AI cannot solve the problem of fake news. – Cathy O'Neil, PhD, Data Scientist

It's easy to dismiss the existential threat of these platforms, even as you watch this documentary, and you read about major ethical problems that exist within these platforms. Even consciously knowing, as you read this piece on your computer, or your phone, or wherever you might come across it, there still exists a disconnect between knowing about all of this and taking action. This is where the biggest challenge exists, in my opinion.

If we don't agree on what is true, or that there is such a thing as truth, we're toast. This is the problem beneath other problems, because if we can't agree on what's true, then we can't navigate out of any of our problems. – Tristan Harris

The race to capture people's attention is not going away. Attention is the currency and will continue to be the currency. But at what cost?

The goal is not to eliminate technology. Alex Roetter, former Senior VP of Engineering at Twitter, makes it clear that you can't put the genie back in the bottle. Even if Facebook, Twitter, Snapchat, Google, etc. were all destroyed overnight, the goals wouldn't change. That's because the business model remains, along with the pressure to grow revenue, engagement, and all the other metrics that increase shareholder value.

Unfortunately, the bigger it gets, the harder it is for anyone to change.

It's confusing because it's simultaneous utopia and dystopia. – Tristan Harris

Tristan believes the people at these platforms are trapped by a business model, an economic incentive, and shareholder pressure that make it almost impossible to do something else. But let's make it clear that there's nothing wrong with companies making money. That's not the argument at all. The issue is that there is no regulation governing how these companies operate. With no competition and no oversight, they become de facto governments that really have no one to answer to.

There's no financial incentive to change, which is the biggest problem when it comes to bringing about real change. Financial incentives run the world, so any solution to the social dilemma has to realign the financial incentives in a way where the ground shifts underneath these platforms. Platforms cannot, and should not, operate in a disinformation-for-profit type of model.

The phone company has tons of sensitive data about you, and we have a lot of laws that make sure they don't do the wrong things. We have almost no laws around digital privacy, for example. – Joe Toscano, Former Experience Design Consultant at Google

One of the solutions brought forth by Joe Toscano, former Experience Design Consultant at Google, is to tax companies on the data assets that they have. The reasoning behind this is that it gives them a fiscal reason to not acquire every piece of data on the planet, which is what they're currently doing.

The law runs way behind on these things, but what I know is the current situation exists not for the protection of users, but for the protection of the rights and privileges of these gigantic, incredibly wealthy companies. Are we always gonna defer to the richest, most powerful people? Or are we ever gonna say 'You know, there are times when there is a national interest. There are times when the interests of people, of users, is actually more important than the profits of somebody who's already a billionaire'? – Roger McNamee

Shoshana Zuboff believes that markets that undermine democracy and freedom should be outlawed altogether. She argues that this is not a radical proposal. After all, other markets are already outlawed. Markets in human slaves and human organs are outlawed because they have "inevitable destructive consequences."

We live in a world where a tree is worth more dead than alive. We live in a world in which a whale is worth more dead than alive. For so long as our economy works in that way, and corporations go unregulated, they're going to continue... even though we know it is destroying the planet and leaving a worse world for future generations. What's frightening, and what hopefully is the last straw that will make us wake up as a civilization to how flawed this theory has been in the first place is to see that now we're the tree, we're the whale. — Justin Rosenstein, Former Engineer at Google and Facebook/Asana Co-Founder

Our attention can be, and is, mined. We're not profitable because we can be turned into paper like a tree, or hunted for our blubber like a whale. We're profitable to corporations when we give them our attention, our information, our friends and family, our likes and dislikes, and everything in between. Corporations are outsmarting us, using all sorts of tools and obscure algorithms to pry us away from our own goals and objectives and keep us laser-focused on building our presence on their platforms. That way, they can charge advertisers more for our attention and information, and ultimately manipulate our behavior.

We can demand that these products be designed humanely. We can demand to not be treated as an extractable resource. The intention could be: How do we make the world better? – Tristan Harris

Something that's important to keep in mind is that this situation is not set in stone. Technology is built a certain way because we designed and engineered it to be that way. And because of that, we also have the power to change it. The problems that currently exist on the internet, and within social media specifically, are not problems that can never be fixed. They can be, and they should be. The social dilemma is so pervasive and powerful that the real danger lies in failing to act on these issues, not in shifting the ground underneath these incredibly powerful platforms.

Throughout history, every single time something's gotten better, it's because somebody has come along to say, 'This is stupid. We can do better.' It's the critics that drive improvement. It's the critics who are the true optimists. – Jaron Lanier, Founding Father of Virtual Reality/Computer Scientist

Conclusion

Social media has been weaponized. A small number of the most powerful corporations in the history of the world run it, and we're being manipulated. Due to poor ethical decisions, companies are trapped in a disinformation-for-profit business model that exploits users and non-users alike. Our data and information are being mined, harvested, and sold around the world.

It's going to take a miracle to get us out of this situation, with the miracle being: collective will.

Actionable tips from those in the social dilemma:

- Turn off notifications.

- Talk openly and honestly about this problem. Voice your opinion.

- Have open conversations about not just successes, but failures so that someone can build something new (and better).

- Apply massive public pressure to reform these companies and their business models.

- Don't use their services.

- Instead of using Google's search engine, use Qwant, DuckDuckGo, or StartPage.

- Never accept a video recommended to you on YouTube.

- Use browser extensions to remove recommendations (but be sure to check the permissions of these extensions).

- Before you share, fact-check, consider the source, and do the extra research. If it seems like something designed to push your emotional buttons, it probably is.

- Vote with your clicks. If you click on clickbait, you're creating a financial incentive that perpetuates this existing system. Don't contribute to this.

- Make sure that you get lots of different kinds of information.

- Talk to people who disagree with you so that you're exposed to different points of view.

- Little to no screen time for kids.

- Kids' rules:

  - All devices out of the bedroom at a fixed time every night.

  - No social media until high school.

  - Work out a time budget with your kid(s).

- Encourage friends and family to delete social media accounts. This matters a lot, because it creates space for conversations where people are free from the manipulation engines.

Hiram's 3 Additional Tips for Navigating Our Social Dilemma

Stop using these services wherever and whenever possible

Many people are forced to use these services because their employers run their business on these services. If this is you, I understand that you're limited while you work. However, no one is forcing you to use those services outside of work. So maybe you're forced to work on G Suite, but you don't need to have a personal Gmail account. Use a privacy-friendly email provider.

Don't use company terminology

Don't call it a "Zoom" call. It's a video chat/call.

Don't tell someone to "Google" it. Tell them to "search" it instead. Or simply "look it up."

The language we use matters more than we think.

Use platform alternatives

It's not about getting rid of social media altogether. The concept of social media itself is great for meeting people and staying connected with the globe. But there are platforms not built on a problematic business model. Use those instead, and refuse to join those that are built on an unethical business model and/or have poor privacy practices.

Here are some alternatives to mainstream social media:

Facebook ➡️ MeWe, Diaspora, Friendica

Twitter ➡️ Mastodon

Instagram ➡️ PixelFed

If you have any more thoughts on the social dilemma and how to minimize its harm on us, I'm all ears. Reach out to me:

- Web: hiram.io

- Rising Tide (blog): hiram.io/blog

- LinkedIn: @hiramfromthechi

- Twitter/X: @hiramfromthechi

- Medium: @hiramfromthechi

- Mastodon: @hiramfromthechi@mastodon.social